Agentic Frameworks Explained: Building Intelligent AI Agents in 2025

You can pick from dozens of agentic frameworks now, each promising to help you build AI agents that reason, plan, and act autonomously. They connect LLMs to external tools, manage execution loops, and coordinate multi-agent workflows. But when the session ends, most agents forget everything. That's the difference between an agent that completes tasks and one that remembers your preferences, learns from past decisions, and gets better over time.

TLDR:

Agentic frameworks provide planning, tool integration, and orchestration for AI agents

Most frameworks lack persistent memory: agents restart from scratch each session

LangGraph excels at complex workflows; AutoGen handles multi-agent coordination reliably

Long-term memory cuts token costs by 90% and speeds responses by 91% versus full context

What Are Agentic Frameworks

Agentic frameworks are software libraries that let developers build AI systems capable of autonomous action. Unlike chatbots that respond to prompts, agentic AI breaks down complex goals into steps, decides which actions to take, uses external tools, and iterates toward solutions without constant human input.

These frameworks provide the scaffolding for planning loops, tool execution, and decision-making logic. They connect LLMs to databases, APIs, and other external systems, then let the agent process results and adjust its approach based on what happens. The agent can call functions, retrieve information, and chain actions together to complete multi-step tasks.

Agentic Framework Adoption and Market Growth

Organizations are deploying AI agents at scale. 79% report AI agent adoption, with 23% running agentic systems in production. The agentic AI market is projected to reach $199.05 billion by 2034.

Production deployments expose gaps that experiments hide. For example, agents that perform well in demos frequently break down under real-world load when tool calls become unreliable or slow. Without robust retry logic, timeout handling, and clear execution boundaries, a single failed API call can derail an entire agent workflow. This is why choosing the right agent development framework is important.
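As a rough sketch of the retry logic and execution boundaries described above, the helper below wraps a tool call with a bounded number of attempts and exponential backoff. The names `call_with_retry` and `flaky_search` are hypothetical and do not come from any particular framework.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def call_with_retry(tool: Callable[[], T], max_attempts: int = 3,
                    base_delay: float = 0.01) -> T:
    """Call `tool`, retrying on any exception with exponential backoff."""
    last_error: Optional[Exception] = None
    for attempt in range(1, max_attempts + 1):
        try:
            return tool()
        except Exception as exc:
            last_error = exc
            if attempt < max_attempts:
                # Wait longer after each failure: base, 2x base, 4x base, ...
                time.sleep(base_delay * 2 ** (attempt - 1))
    # Execution boundary: give up instead of retrying forever.
    raise RuntimeError(f"tool failed after {max_attempts} attempts") from last_error


# Hypothetical flaky tool: fails twice, then succeeds.
calls = {"count": 0}


def flaky_search() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("upstream API timed out")
    return "search results"


result = call_with_retry(flaky_search)
```

A production version would likely also enforce a per-call timeout and retry only on errors known to be transient.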

But framework maturity varies. LangGraph and AutoGen, for example, have production track records. Newer frameworks often lack error handling and observability. You should pick frameworks with active communities and proven patterns for the capabilities required today.

Now let's dig into these frameworks to help you decide on the one best suited to your use case.

Core Capabilities of Agentic Frameworks

Every agentic framework combines a set of core capabilities that determine what agents can do and how reliably they operate.

Planning and Reasoning

Frameworks implement reasoning loops that let agents decompose tasks into steps. The agent generates a plan, executes actions, assesses results, and decides what to do next. Some frameworks use chain-of-thought prompting, while others implement tree search or reflection mechanisms to improve decision quality.
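The plan, execute, assess cycle can be illustrated with a toy loop. In this sketch the "planner" is a plain function standing in for an LLM, and the task (reaching a target number) is invented purely for illustration; `run_agent`, `plan`, and `ACTIONS` are hypothetical names.

```python
from typing import Callable, Dict, List, Tuple

# Actions the agent may take; each maps the current state to a new state.
ACTIONS: Dict[str, Callable[[int], int]] = {
    "increment": lambda n: n + 1,
    "double": lambda n: n * 2,
}


def plan(state: int, goal: int) -> str:
    """Stand-in for an LLM planner: choose the next action."""
    if state > 0 and state * 2 <= goal:
        return "double"
    return "increment"


def run_agent(goal: int, max_steps: int = 10) -> Tuple[int, List[Tuple[str, int]]]:
    state = 0
    history: List[Tuple[str, int]] = []
    for _ in range(max_steps):
        if state == goal:               # assess: is the goal met?
            break
        action = plan(state, goal)      # plan: decide the next step
        state = ACTIONS[action](state)  # act: execute it
        history.append((action, state))
    return state, history


final_state, history = run_agent(goal=10)
```

The `max_steps` cap matters as much as the loop itself: without it, a planner that never converges would run indefinitely.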

Tool Integration

Agents need access to external systems. Frameworks provide abstractions for defining functions the agent can call, such as querying a database, hitting an API, or running code. The agent decides which tool to use based on context, executes it, and processes the response.
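One common way to structure this is a registry that maps tool names to functions, plus a dispatcher that executes whatever call the model requests. The sketch below assumes the model emits a JSON object with `name` and `arguments` fields; that shape, and the names `tool`, `dispatch`, `add`, and `lookup_user`, are assumptions for illustration and not any framework's actual API.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the agent can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: float, b: float) -> float:
    return a + b


@tool
def lookup_user(user_id: str) -> dict:
    # Hypothetical in-memory "database" for the example.
    users = {"u1": {"name": "Ada"}}
    return users.get(user_id, {})


def dispatch(tool_call_json: str) -> dict:
    """Execute a tool call expressed as JSON and wrap the outcome."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        # Return the error as data so the agent can react to it.
        return {"error": f"unknown tool: {name}"}
    return {"result": TOOLS[name](**call["arguments"])}


response = dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}')
```

Returning errors as structured data, instead of raising, lets the agent see the failure and choose a different tool on its next step.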

Orchestration and Control Flow

Orchestration determines how agents coordinate multiple actions. Some frameworks use sequential chains, others implement parallel execution or conditional branching. Graph-based systems let you define workflows where agents revisit previous steps or route tasks dynamically based on intermediate results.

Memory and State Management

Agents need to retain context across actions and sessions. Frameworks handle short-term working memory during task execution, but most lack built-in long-term memory. Persistent memory lets agents recall user preferences, past decisions, and learned information across conversations, which reduces repeated context and improves personalization.

Memory Architecture in Agentic Systems

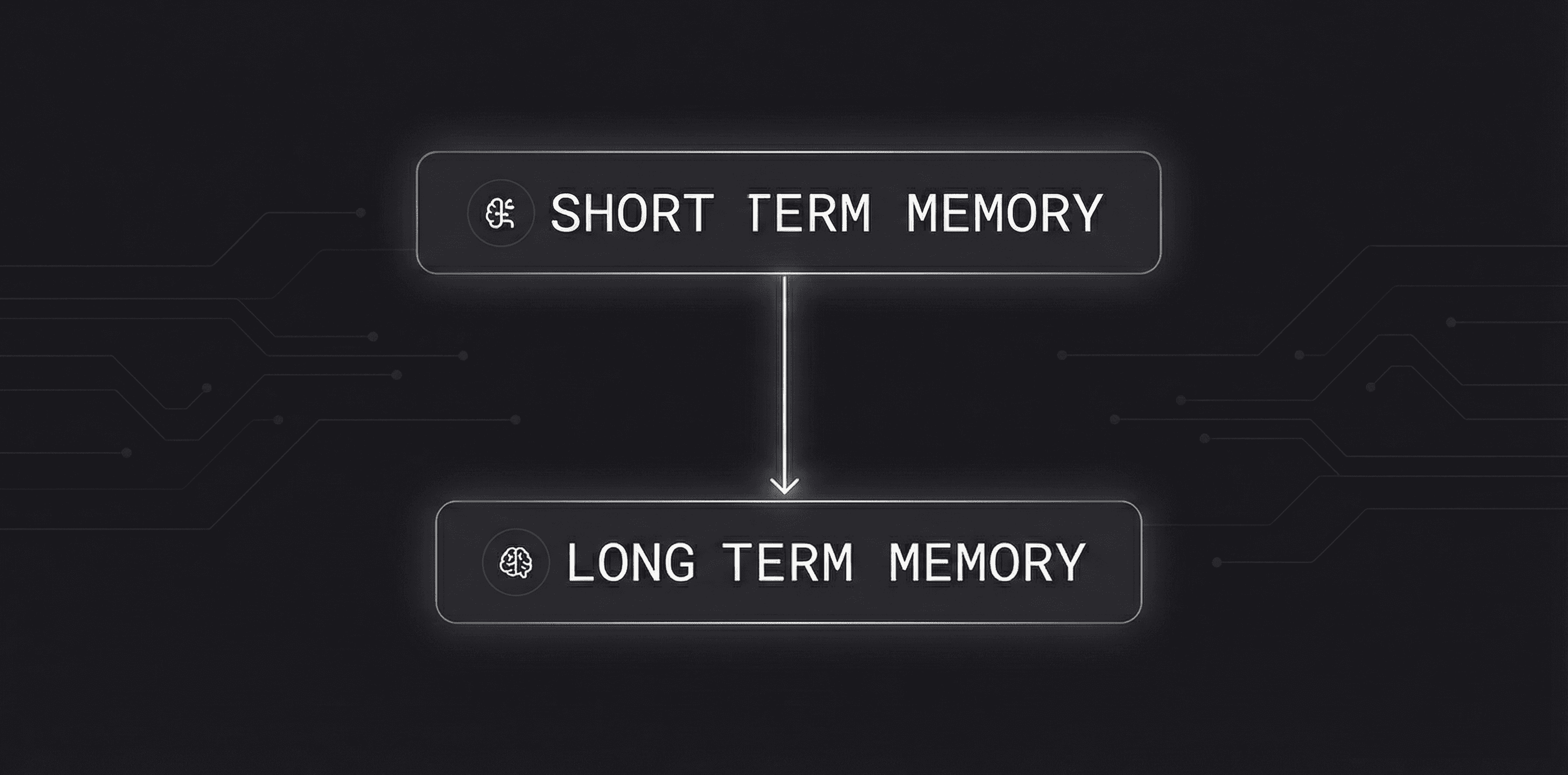

Most agentic frameworks conflate context windows with memory. A context window holds recent conversation turns during a session but resets when the task ends. Real memory persists across sessions and lets agents learn from past interactions. In practice, agents work with two primary types of memory:

Short-term memory maintains state during task execution. The agent tracks which steps it completed, what tools it called, and intermediate results. This working memory disappears once the task finishes.

Long-term memory stores information across conversations. An agent with persistent memory recalls user preferences, past decisions, and learned context from weeks ago. This reduces repeated prompts and cuts token costs by eliminating redundant context in every request.

Few frameworks implement long-term memory natively. Most rely on external databases or leave it to developers. Without dedicated memory layers to retrieve relevant past interactions, agents restart from zero each session.
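The distinction between the two memory types can be shown with a small sketch. Here short-term memory is a dictionary that lives only for one session, while long-term memory is a JSON file that survives between sessions. The file-backed store and the names `LongTermMemory` and `run_session` are illustrative assumptions; a real memory layer would typically add retrieval and relevance ranking.

```python
import json
import tempfile
from pathlib import Path


class LongTermMemory:
    """Persistent key-value store backed by a JSON file."""

    def __init__(self, path: Path) -> None:
        self.path = path

    def load(self) -> dict:
        if self.path.exists():
            return json.loads(self.path.read_text())
        return {}

    def remember(self, key: str, value: str) -> None:
        data = self.load()
        data[key] = value
        self.path.write_text(json.dumps(data))


def run_session(memory: LongTermMemory, user_message: str) -> str:
    """Run one session and return the preference known at session start."""
    working = {"steps": []}  # short-term memory: discarded when this returns
    known = memory.load().get("preference", "unknown")
    working["steps"].append("recall_preference")
    prefix = "I prefer "
    if user_message.startswith(prefix):
        memory.remember("preference", user_message[len(prefix):])
        working["steps"].append("store_preference")
    return known


with tempfile.TemporaryDirectory() as tmp:
    memory = LongTermMemory(Path(tmp) / "memory.json")
    first = run_session(memory, "I prefer dark mode")         # nothing known yet
    second = run_session(memory, "What theme should I use?")  # recalls session one
```

The second session never sees the first session's transcript, yet it still knows the preference, because only the long-term store carried over.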

LangGraph: Graph-Based Workflow Control

LangGraph models agent workflows as state machines. You define nodes (actions or decisions) and edges (transitions between them), then let the agent traverse the graph based on runtime conditions. This structure supports cyclical flows where agents revisit steps, backtrack on errors, or loop until a condition is met. State management is explicit. Each node receives the current state, performs an action, and returns an updated state. The framework tracks state transitions across the graph, making it easier to debug multi-step processes.
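To make the node-and-edge model concrete, here is a plain-Python sketch of a cyclic workflow. It deliberately does not use LangGraph's own API; `NODES`, `EDGES`, and `run_graph` are hypothetical names that only mirror the idea of nodes returning updated state and edges choosing the next node at runtime.

```python
from typing import Callable, Dict, List, Tuple

State = dict


def draft(state: State) -> State:
    # Each node receives the current state and returns an updated copy.
    return {**state, "draft": state.get("draft", "") + "x",
            "attempts": state["attempts"] + 1}


def review(state: State) -> State:
    return {**state, "approved": len(state["draft"]) >= 3}


NODES: Dict[str, Callable[[State], State]] = {"draft": draft, "review": review}

# Edges pick the next node from the current state (conditional routing).
EDGES: Dict[str, Callable[[State], str]] = {
    "draft": lambda s: "review",
    "review": lambda s: "END" if s["approved"] else "draft",  # loop back
}


def run_graph(entry: str, state: State, max_steps: int = 20) -> Tuple[State, List[str]]:
    current, trace = entry, []
    for _ in range(max_steps):
        if current == "END":
            break
        trace.append(current)
        state = NODES[current](state)
        current = EDGES[current](state)
    return state, trace


final_state, trace = run_graph("draft", {"attempts": 0})
```

The `trace` list records every transition, which is the property that makes graph-based workflows easier to debug than free-form loops.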

LangGraph works well for applications requiring human-in-the-loop approval. You can pause execution at specific nodes, wait for human input, then resume. This is valuable in scenarios where agents need oversight before committing actions like sending emails or executing transactions.
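The pause-and-resume idea can be sketched with a Python generator, which suspends at `yield` until a decision is sent back in. This is an in-process illustration only and not how LangGraph implements interrupts; `email_workflow`, `start`, and `resume` are hypothetical names, and a real system would persist the paused state so it survives restarts.

```python
from typing import Generator


def email_workflow(recipient: str) -> Generator[dict, str, str]:
    draft = f"Hello {recipient}, your report is ready."
    # Pause here: surface the draft to a human and wait for a decision.
    decision = yield {"awaiting": "approval", "draft": draft}
    if decision == "approve":
        return f"sent to {recipient}"
    return "cancelled"


def start(workflow: Generator[dict, str, str]) -> dict:
    """Run the workflow until its first pause and return the pending request."""
    return next(workflow)


def resume(workflow: Generator[dict, str, str], decision: str) -> str:
    """Feed the human decision back in and run to completion."""
    try:
        workflow.send(decision)
    except StopIteration as done:
        return done.value
    raise RuntimeError("workflow paused again unexpectedly")


wf = email_workflow("Ada")
pending = start(wf)              # execution is now suspended at the approval step
outcome = resume(wf, "approve")  # the human approved, so the email is "sent"
```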

When Should You Use LangGraph?

Choose LangGraph when workflows involve complex branching, conditional logic, or iterative refinement. If your agent needs to retry failed steps, gather feedback, and adjust its approach dynamically, graph-based control provides the structure to manage that complexity reliably.

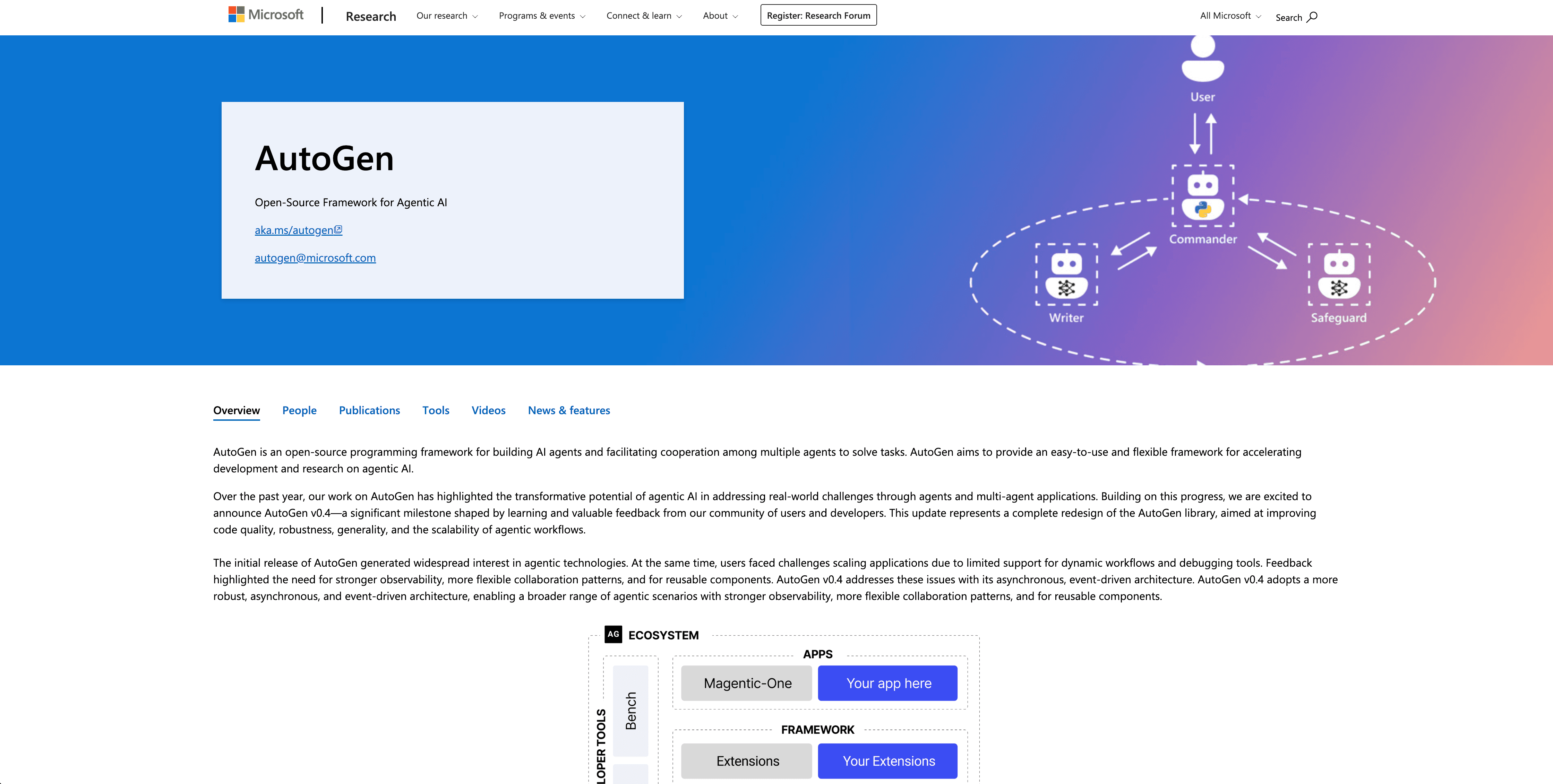

AutoGen: Conversation-Driven Multi-Agent Systems

AutoGen structures agent interactions through message passing. Agents exchange messages to coordinate work: each agent receives a message, processes it, and optionally replies. This pattern lets you compose multi-agent systems where specialized agents handle different parts of a task. The framework supports conversational patterns including two-agent chat, sequential group discussions, and nested conversations where one agent delegates subtasks to others. Agents can be human proxies, LLM-based assistants, or code executors that run scripts and return results.
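The receive, process, optionally reply pattern can be sketched in a few lines of plain Python. This does not use AutoGen's API; `Message`, `Agent`, and `two_agent_chat` are hypothetical names, and the reply functions stand in for LLM calls.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Message:
    sender: str
    content: str


class Agent:
    def __init__(self, name: str, reply_fn: Callable[[str], Optional[str]]) -> None:
        self.name = name
        self.reply_fn = reply_fn  # stand-in for an LLM call

    def receive(self, message: Message) -> Optional[Message]:
        reply = self.reply_fn(message.content)
        return Message(self.name, reply) if reply is not None else None


def two_agent_chat(a: Agent, b: Agent, opening: str, max_turns: int = 6) -> List[Message]:
    """`a` opens; the agents alternate until one declines to reply."""
    transcript = [Message(a.name, opening)]
    receiver, other = b, a
    for _ in range(max_turns):
        reply = receiver.receive(transcript[-1])
        if reply is None:  # no reply ends the conversation
            break
        transcript.append(reply)
        receiver, other = other, receiver
    return transcript


writer = Agent("writer", lambda text: "Agents plan, act, and assess results.")
critic = Agent("critic", lambda text: "Too short, please expand." if len(text) < 20 else None)

transcript = two_agent_chat(writer, critic, "Agents plan.")
```

Here the critic ends the exchange by not replying once the draft is long enough, which is one simple way to express a termination condition in a conversational system.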

AutoGen includes built-in error handling and retry logic. When tool calls fail or agents produce invalid outputs, the framework catches errors, prompts for corrections, and resumes execution. This makes it suitable for production environments where reliability is required.

When Should You Use AutoGen?

Use AutoGen when building enterprise systems that need auditability and structured collaboration between multiple specialized agents.

CrewAI: Role-Based Agent Teams

CrewAI assigns each agent a specific role, goal, and backstory. One agent handles research, another writes content, a third reviews output. You define the crew structure upfront, specify how agents collaborate, and the framework coordinates task delegation. This role-based model simplifies prototyping. Developers can map tasks to roles without designing state machines or message-passing protocols. Tasks flow sequentially or hierarchically through the crew based on dependencies you define.
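A role-based sequential crew can be approximated as a list of agents, each with a role and goal, where every agent's output becomes the next agent's input. The sketch below is not CrewAI's API; `RoleAgent` and `run_crew` are hypothetical, and each `work` function is a placeholder for an LLM call.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class RoleAgent:
    role: str
    goal: str
    work: Callable[[str], str]  # placeholder for an LLM call


def run_crew(crew: List[RoleAgent], task: str) -> str:
    """Pass the task through the crew in order, chaining outputs."""
    output = task
    for agent in crew:
        output = agent.work(output)
    return output


crew = [
    RoleAgent("researcher", "collect facts", lambda t: f"facts about {t}"),
    RoleAgent("writer", "draft an article", lambda t: f"article based on {t}"),
    RoleAgent("reviewer", "check quality", lambda t: f"{t} (reviewed)"),
]

final = run_crew(crew, "agent memory")
```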

When Should You Use CrewAI?

CrewAI works well for content generation pipelines, research workflows, and scenarios where agent responsibilities map cleanly to job functions. Choose it when speed of implementation matters more than fine-grained orchestration control.

OpenAI Swarm: Lightweight Agent Coordination

OpenAI Swarm is an open-source framework that handles multi-agent coordination through agent handoffs. One agent completes its task, then transfers control to another agent. Each agent specifies which agents it can route to and when. The framework uses minimal abstractions. You define agents as functions with instructions and available actions. Swarm manages routing based on runtime decisions.
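The handoff pattern can be sketched as agents whose handler returns either a final answer or another agent to transfer control to. This is plain Python and not Swarm's actual API; `Agent`, `run`, and the three example agents are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple, Union


@dataclass
class Agent:
    name: str
    # Returns a final answer (str) or another Agent to hand off to.
    handle: Callable[[str], Union[str, "Agent"]]


def run(agent: Agent, message: str, max_handoffs: int = 5) -> Tuple[str, List[str]]:
    path = [agent.name]
    for _ in range(max_handoffs + 1):
        result = agent.handle(message)
        if isinstance(result, Agent):
            agent = result            # transfer control to the next agent
            path.append(agent.name)
        else:
            return result, path
    raise RuntimeError("too many handoffs")


billing = Agent("billing", lambda msg: "refund issued")
support = Agent("support", lambda msg: "ticket opened")
triage = Agent("triage", lambda msg: billing if "refund" in msg else support)

answer, path = run(triage, "I need a refund")
```

The `max_handoffs` limit guards against two agents handing a request back and forth indefinitely.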

When Should You Use OpenAI Swarm?

This experimental framework works for prototyping multi-agent patterns. It lacks persistence, error recovery, and orchestration logic needed for production systems. Use Swarm to test coordination patterns, not for deployments requiring reliability.

LangChain and Semantic Kernel: Ecosystem Integration

LangChain provides a library of integrations, pre-built chains, and retrieval components that connect to hundreds of data sources, LLM providers, and vector stores through standardized interfaces. The framework prioritizes composability: swap LLM providers, change retrieval strategies, or switch vector databases without rewriting application logic. This makes it a foundation for prototyping across different AI stacks.

Semantic Kernel targets .NET environments and Microsoft ecosystems. It provides first-class support for Azure OpenAI, Azure AI Search, and other Microsoft services. The architecture uses plugins and planners that align with C# conventions like dependency injection and async patterns.

Both frameworks handle RAG but treat memory as retrieval over static documents. They lack persistent memory that evolves across sessions. This limits their ability to build agents that adapt to user preferences, maintain conversation history across interactions, or personalize responses based on past behavior.

Choosing the Right Framework for Your Use Case

Framework selection depends on workflow complexity and team constraints. LangGraph works well for applications requiring conditional branching, human approval steps, or iterative refinement. For straightforward multi-agent collaboration with clear role definitions, CrewAI accelerates prototyping.

Team expertise shapes adoption speed. LangChain and Semantic Kernel integrate with existing data pipelines and offer extensive documentation, reducing ramp-up time for teams already using Python or .NET stacks. AutoGen requires understanding message-passing patterns but delivers reliability for production deployments.

So which framework should you choose? In short, you should assess integration requirements early. Check whether the framework supports your vector database, LLM provider, and deployment environment without custom adapters. Frameworks with active communities surface edge cases faster and provide battle-tested solutions for common problems.

Orchestration Patterns and Multi-Agent Coordination

Beyond building single agents, you may want to coordinate multiple agents. In that case, you'll need to understand which orchestration patterns your framework supports:

Centralized coordinators route tasks from a single control point

Peer-to-peer patterns let agents communicate directly

Keep in mind a basic tenet of orchestration: choose centralized coordination for workflows requiring auditability and predictable paths. Use peer-to-peer patterns when agents need adaptive collaboration without predetermined routes.

Centralized Orchestration

A coordinator agent receives requests, delegates to specialized agents, and aggregates results. This approach simplifies debugging and provides clear audit trails for hierarchical workflows with predictable task dependencies.
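A minimal sketch of this pattern, assuming each specialist is just a function and the plan is a fixed list of specialist names. `Coordinator` and its methods are hypothetical names for illustration.

```python
from typing import Callable, Dict, List, Tuple

Specialist = Callable[[str], str]


class Coordinator:
    """Single control point: delegates, records, and aggregates."""

    def __init__(self, specialists: Dict[str, Specialist]) -> None:
        self.specialists = specialists
        self.audit_log: List[Tuple[str, str, str]] = []

    def handle(self, request: str, plan: List[str]) -> str:
        results = []
        for name in plan:
            output = self.specialists[name](request)
            # Every delegation is recorded as (specialist, input, output).
            self.audit_log.append((name, request, output))
            results.append(output)
        return " | ".join(results)


coordinator = Coordinator({
    "research": lambda r: f"notes on {r}",
    "summarize": lambda r: f"summary of {r}",
})

answer = coordinator.handle("vector databases", ["research", "summarize"])
```

Because every call passes through `handle`, the audit log is complete by construction, which is the main debugging advantage of the centralized approach.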

Peer-to-Peer Orchestration

Agents negotiate directly and pass messages until task completion. AutoGen uses conversational patterns where agents exchange messages without a central router, allowing for more flexible collaboration.

Graph-Based Orchestration

LangGraph supports hybrid workflows where some nodes coordinate while others communicate peer-to-peer. This pattern handles complex scenarios requiring conditional routing and iterative refinement.

Production Deployment Challenges and Solutions

Deploying your agent into production will expose issues that testing missed. To diagnose them, log agent decisions, tool calls, and outputs from the start, and track success rates by task type. When agents fail, capture the full context: inputs, intermediate steps, and final state. These simple practices give you a clear path to remediation. The stakes are real: Gartner predicts that over 40% of agentic AI projects will be canceled by the end of 2027, citing escalating costs, unclear business value, or inadequate risk controls.
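The logging practices above can be sketched as a small in-memory run log that stores full context per run and computes success rates by task type. `RunRecord` and `RunLog` are hypothetical names, and the sample records are invented; a real deployment would write to durable storage or an observability backend.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RunRecord:
    task_type: str
    inputs: dict
    steps: List[str]      # decisions and tool calls, in order
    final_state: dict
    success: bool


class RunLog:
    def __init__(self) -> None:
        self.records: List[RunRecord] = []

    def record(self, run: RunRecord) -> None:
        self.records.append(run)

    def success_rates(self) -> Dict[str, float]:
        totals: Dict[str, int] = defaultdict(int)
        wins: Dict[str, int] = defaultdict(int)
        for run in self.records:
            totals[run.task_type] += 1
            wins[run.task_type] += int(run.success)
        return {task: wins[task] / totals[task] for task in totals}

    def failures(self) -> List[RunRecord]:
        """Full context for every failed run, ready for debugging."""
        return [run for run in self.records if not run.success]


log = RunLog()
log.record(RunRecord("search", {"q": "pricing"}, ["call:search"], {"hits": 3}, True))
log.record(RunRecord("search", {"q": "refunds"}, ["call:search", "timeout"], {}, False))
log.record(RunRecord("email", {"to": "ada"}, ["draft", "send"], {"sent": True}, True))

rates = log.success_rates()
```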

Beyond those issues, production deployment raises infrastructure challenges. How you deploy your agent affects both cost and latency. For example, a persistent memory layer can cut token consumption by removing redundant context from every request. And if you monitor token usage and response times from day one, you can spot performance bottlenecks before they scale.

Building Agentic AI with Memory Layers

Most agentic frameworks handle planning and orchestration but stop short of persistent memory. Without recall, agents restart from scratch each session. Developers compensate by feeding entire conversation histories into context windows, which scales poorly and wastes tokens on repeated context.

A dedicated memory layer stores and retrieves relevant context across sessions, as shown in this mem0 tutorial. When an agent queries memory instead of replaying full transcripts, token costs drop and response times improve. The agent recalls user preferences, past decisions, and learned information without reconstructing everything from logs. Like the choice of framework, the choice of persistent memory (if any) affects how you deploy into production, the infrastructure you'll need, and how the system scales.
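A back-of-the-envelope comparison shows why replaying transcripts scales poorly. The sketch below counts input tokens under two simplified models: resending the full history on every turn versus sending only the new turn plus a fixed budget of retrieved memories. The turn sizes and the memory budget are made-up illustrative numbers, not measurements, and `replay_tokens` and `memory_tokens` are hypothetical helper names.

```python
from typing import List


def replay_tokens(turn_tokens: List[int]) -> int:
    """Total input tokens when every request resends all turns so far."""
    total, history = 0, 0
    for tokens in turn_tokens:
        history += tokens   # the history grows with each turn
        total += history    # and is sent in full every time
    return total


def memory_tokens(turn_tokens: List[int], memory_budget: int) -> int:
    """Total input tokens when each request sends the new turn
    plus a fixed-size slice of retrieved memories."""
    return sum(tokens + memory_budget for tokens in turn_tokens)


turns = [100] * 10                       # assumed: 10 turns of 100 tokens each
replay = replay_tokens(turns)            # 100 + 200 + ... + 1000
with_memory = memory_tokens(turns, 150)  # assumed: 150 tokens of memories per turn
savings = 1 - with_memory / replay
```

Replay cost grows quadratically with conversation length while the memory-based cost grows linearly, so the gap widens as conversations get longer. Actual savings depend on turn sizes and how much retrieved context each request needs.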

Final Thoughts on Selecting Agentic Frameworks for Production

You can build sophisticated agents with agentic frameworks, but planning and tool integration alone won't create personalized experiences. Mem0 provides the memory layer that lets agents recall context across sessions, not just during task execution. When your agents remember user preferences and past interactions, token costs drop and responses feel less mechanical. The right framework handles orchestration, but memory is what makes agents adapt over time instead of starting fresh every conversation.

FAQ

What is the difference between context windows and persistent memory in agentic frameworks?

Context windows hold recent conversation turns during a session but reset when the task ends. Persistent memory stores information across conversations, letting agents recall user preferences, past decisions, and learned context from previous sessions without reconstructing everything from scratch each time.

How do I choose between LangGraph and AutoGen for my agent workflow?

LangGraph works best for workflows requiring conditional branching, human approval steps, or iterative refinement where agents need to revisit previous steps. AutoGen is better suited for enterprise systems that need auditability and structured collaboration between multiple specialized agents through message-passing patterns.

Why do most agentic frameworks lack built-in long-term memory?

Most frameworks conflate context windows with memory and focus on planning and orchestration instead of state persistence. They leave memory implementation to developers or rely on external databases, which means agents restart from zero each session unless you build custom memory solutions.

Can I add persistent memory to any agentic framework?

Yes, memory layers work with any framework architecture such as LangGraph, AutoGen, CrewAI, or custom systems. A dedicated memory layer handles storage and retrieval through simple API calls, letting you add cross-session recall without rebuilding your orchestration logic or switching frameworks.

When should I implement memory in my AI agent instead of using larger context windows?

Implement memory when you're feeding entire conversation histories into context windows, experiencing high token costs from repeated context, or need agents to recall information from weeks ago. Memory cuts token consumption and improves response times by retrieving only relevant past context instead of replaying full transcripts.
