Introducing OpenMemory MCP

OpenMemory is a local memory infrastructure powered by Mem0 that lets you carry your memory across any AI app. It provides a unified memory layer that stays with you, enabling agents and assistants to remember what matters across applications.

Today, we’re launching the first building block: the OpenMemory MCP Server - a private, local-first memory layer with a built-in UI, compatible with all MCP clients.

What is the OpenMemory MCP Server

The OpenMemory MCP Server is a private, local-first memory server that creates a shared, persistent memory layer for your MCP-compatible tools. This runs entirely on your machine, enabling seamless context handoff across tools. Whether you're switching between development, planning, or debugging environments, your AI assistants can access relevant memory without needing repeated instructions.

The OpenMemory MCP Server ensures all memory stays local, structured, and under your control with no cloud sync or external storage.

How the OpenMemory MCP Server Works

Built around the Model Context Protocol (MCP), the OpenMemory MCP Server exposes a standardized set of memory tools:

add_memories: Store new memory objects

search_memory: Retrieve relevant memories

list_memories: View all stored memory

delete_all_memories: Clear memory entirely

Any MCP-compatible tool can connect to the server and use these APIs to persist and access memory.
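To make the four tools concrete, here is a minimal in-process sketch of the operations they name. It is a toy model, not the OpenMemory implementation: the class name `ToyMemoryStore`, the method parameters, and the keyword-overlap search are all assumptions made for illustration. The real server is built on Mem0 and its tool signatures and retrieval logic may differ.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, Iterator, List


@dataclass
class ToyMemoryStore:
    """Hypothetical stand-in that mirrors the four tool names listed above."""

    _memories: Dict[int, str] = field(default_factory=dict)
    _ids: Iterator[int] = field(default_factory=lambda: itertools.count(1))

    def add_memories(self, text: str) -> int:
        """Store one memory and return its (assumed) integer id."""
        memory_id = next(self._ids)
        self._memories[memory_id] = text
        return memory_id

    def search_memory(self, query: str) -> List[str]:
        """Return memories sharing at least one word with the query.

        Naive keyword overlap, used here only to keep the sketch small.
        """
        terms = set(query.lower().split())
        scored = []
        for text in self._memories.values():
            overlap = len(terms & set(text.lower().split()))
            if overlap:
                scored.append((overlap, text))
        # Highest overlap first; ties keep insertion order (sort is stable).
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored]

    def list_memories(self) -> List[str]:
        """Return every stored memory in insertion order."""
        return list(self._memories.values())

    def delete_all_memories(self) -> None:
        """Remove every stored memory."""
        self._memories.clear()


if __name__ == "__main__":
    store = ToyMemoryStore()
    store.add_memories("Project uses Python 3.12 and pytest")
    store.add_memories("Prefer tabs over spaces in Go code")
    print(store.search_memory("python version"))
```

In the actual setup, each MCP client would invoke these operations as tool calls over the protocol rather than as Python methods, with the server holding the single shared store.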

What It Enables

Cross-Client Memory Access: Store context in Cursor and retrieve it later in Claude or Windsurf without repeating yourself.

Fully Local Memory Store: All memory is stored on your machine. Nothing goes to the cloud. You maintain full ownership and control.

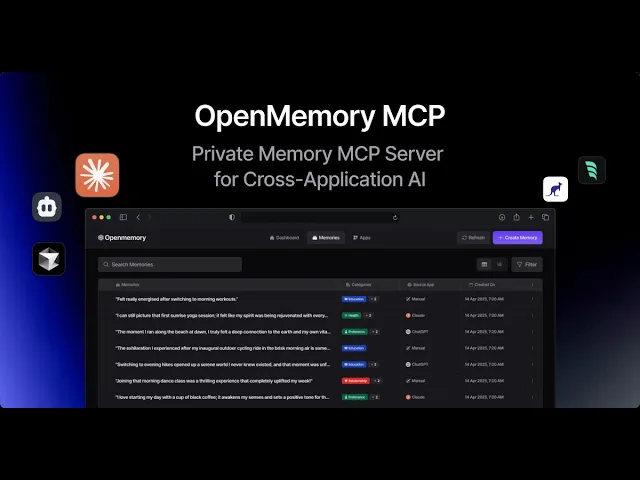

Unified Memory UI: The built-in OpenMemory dashboard provides a central view of everything stored. Add, browse, and delete memories, and control which clients can access them, directly from the dashboard.

Supported Clients

The OpenMemory MCP Server is compatible with any client that supports the Model Context Protocol. This includes:

Cursor

Claude Desktop

Windsurf

Cline, and more.

As more AI systems adopt MCP, your private memory becomes more valuable.

Installation and Setup

Getting started with OpenMemory is straightforward and takes just a few minutes to set up on your local machine. Follow these steps:

Configure Your MCP Client

To connect Cursor, Claude Desktop, or other MCP clients, you'll need your user ID. You can find it by running:

Then add the following configuration to your MCP client (replace your-username with your username):

The OpenMemory dashboard will be available at http://localhost:3000. From here, you can view and manage your memories, as well as check connection status with your MCP clients.
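If the dashboard does not load in the browser, a quick reachability check can help tell "server not running" apart from a browser issue. The sketch below only tests whether something answers HTTP at the address mentioned above; the function name `dashboard_reachable` is hypothetical, and it does not use or assume any OpenMemory API.

```python
import urllib.error
import urllib.request

DASHBOARD_URL = "http://localhost:3000"  # address given in the text above


def dashboard_reachable(url: str = DASHBOARD_URL, timeout: float = 2.0) -> bool:
    """Return True if anything answers HTTP at `url`, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a 2xx status.
        return True
    except OSError:
        # Covers URLError, refused connections, and timeouts.
        return False


if __name__ == "__main__":
    state = "reachable" if dashboard_reachable() else "not reachable"
    print(f"Dashboard at {DASHBOARD_URL} is {state}")
```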

Once set up, OpenMemory runs locally on your machine, ensuring all your AI memories remain private and secure while being accessible across any compatible MCP client.

See It in Action 🎥

We've put together a short demo to show how it works in practice:

Real-World Examples

Scenario 1: Cross-Tool Project Flow

Define the technical requirements of a project in Claude Desktop. Build in Cursor. Debug issues in Windsurf - all with shared context passed through OpenMemory.

Scenario 2: Preferences That Persist

Set your preferred code style or tone in one tool. When you switch to another MCP client, it can access those same preferences without redefining them.

Scenario 3: Project Knowledge

Save important project details once, then access them from any compatible AI tool, with no more repetitive explanations.

Conclusion

The OpenMemory MCP Server brings memory to MCP-compatible tools without giving up control or privacy. It solves a foundational limitation in modern LLM workflows: the loss of context across tools, sessions, and environments.

By standardizing memory operations and keeping all data local, it reduces token overhead, improves performance, and unlocks more intelligent interactions across the growing ecosystem of AI assistants.

This is just the beginning. The MCP server is the first core layer in the OpenMemory platform - a broader effort to make memory portable, private, and interoperable across AI systems.

Getting Started Today

Read the documentation: https://docs.mem0.ai/openmemory

With OpenMemory MCP, your AI memories stay private, portable, and under your control, exactly where they belong.

OpenMemory: Your memories, your control.
