Add Persistent Memory to Claude Code with Mem0 (5-Minute Setup)

Claude Code is a phenomenal piece of technology. But it shares a problem with every other LLM tool: it has no memory across sessions.

I’ll walk you through the steps to add a persistent memory layer to Claude Code with Mem0, covering both CLI and desktop versions.

Why Add Memory to Claude Code?

Every time you start a Claude Code session, you need to explain your project architecture, re-state your coding preferences, and re-describe bugs you’ve already fixed. This repetition wastes time and tokens.

I read a Hacker News discussion where a developer measured a baseline task at 10-11 minutes with 3+ exploration agents launched. With memory context injection, the same task finished in 1-2 minutes with zero exploration agents.

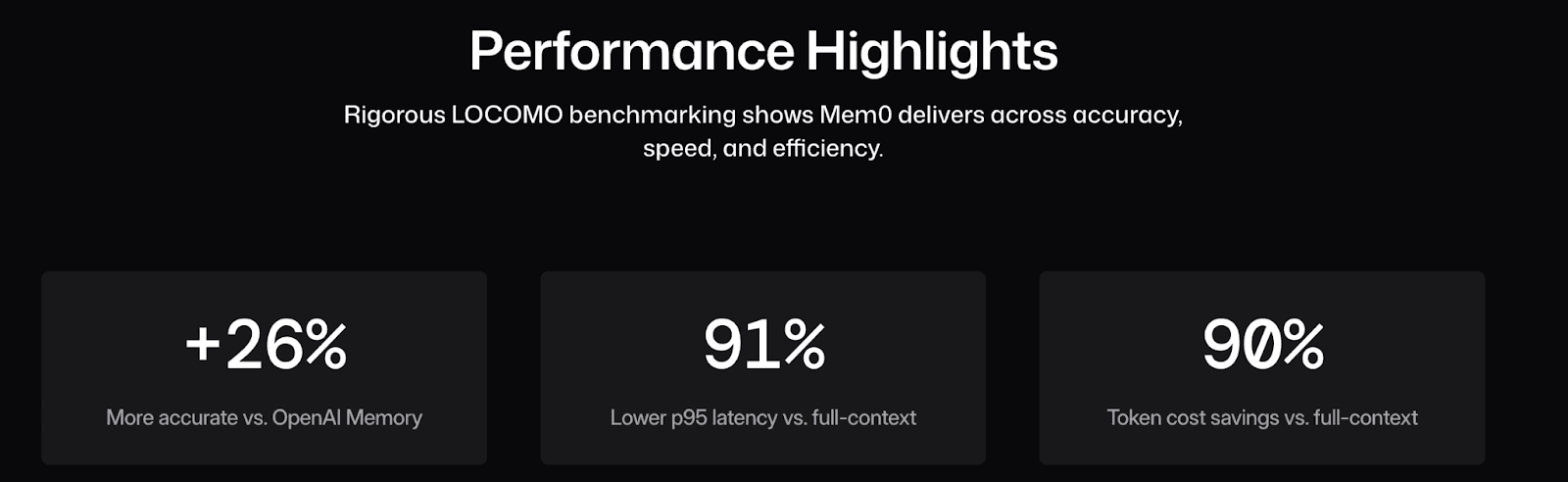
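To put those figures in perspective, here is a quick back-of-the-envelope calculation. It only uses the two time ranges quoted above; the function name is my own.

```python
def speedup_range(before_minutes: tuple[float, float],
                  after_minutes: tuple[float, float]) -> tuple[float, float]:
    """Return (worst-case, best-case) speedup for two (low, high) time ranges."""
    before_low, before_high = before_minutes
    after_low, after_high = after_minutes
    # Worst case: fastest baseline vs slowest memory-assisted run, and vice versa.
    return before_low / after_high, before_high / after_low


low, high = speedup_range((10, 11), (1, 2))
print(f"{low:.1f}x to {high:.1f}x faster")  # prints "5.0x to 11.0x faster"
```

So the reported speedup sits somewhere between 5x and 11x, depending on which ends of the two ranges you compare.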

We saw similar results with our internal benchmarks.

When we tested agents with and without memory, agents using Mem0 showed 90% lower token usage and 91% faster responses than full-context approaches.

AI memory systems like Mem0 extract key facts from conversations, store them in searchable vector databases, and inject relevant context into future sessions automatically.
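To make the store, search, and inject loop concrete, here is a toy sketch. It is not how Mem0 works internally: I'm assuming word overlap as a crude stand-in for vector similarity, the caller supplies the facts instead of an LLM extracting them, and the class name ToyMemoryStore is made up for this example.

```python
import re
from dataclasses import dataclass, field


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class ToyMemoryStore:
    facts: list[str] = field(default_factory=list)

    def add(self, fact: str) -> None:
        # A real system would extract facts with an LLM; here the caller supplies them.
        if fact not in self.facts:
            self.facts.append(fact)

    def search(self, query: str, limit: int = 2) -> list[str]:
        # Rank by word overlap (Jaccard score) instead of vector similarity.
        q = tokenize(query)
        scored = []
        for fact in self.facts:
            f = tokenize(fact)
            union = q | f
            score = len(q & f) / len(union) if union else 0.0
            if score > 0:
                scored.append((score, fact))
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [fact for _, fact in scored[:limit]]

    def inject(self, prompt: str) -> str:
        # Prepend matching memories so the model sees them before the prompt.
        hits = self.search(prompt)
        if not hits:
            return prompt
        context = "\n".join(f"- {hit}" for hit in hits)
        return f"Relevant memories:\n{context}\n\n{prompt}"


store = ToyMemoryStore()
store.add("Auth uses NextAuth with Google and email providers")
store.add("Project uses PostgreSQL with Prisma")
print(store.inject("Which database does the project use?"))
```

Only the PostgreSQL fact shares a word with the question, so it is the only memory that gets injected. A production system replaces the overlap score with embeddings, which also catch matches that share no literal words.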

How To Implement Mem0 In Claude Code

You can integrate persistent memory in Claude Code using the official Mem0 MCP server. Here’s a walkthrough.

Prerequisites

Install the Mem0 Python SDK (requires Python 3.9+; 3.10+ recommended): pip3 install mem0ai

Get your API key from app.mem0.ai. The free tier includes 10,000 memories and 1,000 retrieval calls per month.

The MCP (Model Context Protocol) approach works across both CLI and desktop versions with identical configuration. MCP is Anthropic's open standard for AI-tool integrations.

Step 1: Install the MCP server

First, install the Mem0 MCP server. It’s available as a pip package, mem0-mcp-server.

Then check where the package was installed using the command below:

Note the path you see here; we’ll need it for the next step.
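If you'd rather do the lookup from Python, here is a minimal sketch using the standard library's importlib.metadata. The function name is my own, and the result is roughly what the Location field of pip show reports; treat it as one way to find the directory, not the only one.

```python
from importlib import metadata
from pathlib import Path
from typing import Optional


def install_location(dist_name: str) -> Optional[Path]:
    """Return the directory an installed distribution lives in, or None."""
    try:
        dist = metadata.distribution(dist_name)
    except metadata.PackageNotFoundError:
        return None
    # locate_file("") resolves to the directory that holds the package files.
    return Path(str(dist.locate_file(""))).resolve()


if __name__ == "__main__":
    # "mem0-mcp-server" is the package name given in this article's FAQ.
    print(install_location("mem0-mcp-server") or "package not installed")
```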

Step 2a: Configure for Claude Code CLI

Create or edit .mcp.json in your project root for team-shared configuration:

Set your API key as an environment variable or in your shell profile:
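As a rough illustration of what such a file can contain, the sketch below generates a project-scoped .mcp.json from Python. The top-level mcpServers key with command, args, and env is the shape Claude Code reads. The entry name mem0, the MEM0_API_KEY variable name, and the command path are assumptions here, so swap in the path you noted in Step 1 and check the server's own docs for the exact values.

```python
import json
from pathlib import Path


def build_mcp_config(server_command: str) -> dict:
    """Build a project-scoped MCP config with a single mem0 entry."""
    return {
        "mcpServers": {
            "mem0": {  # entry name is an assumption; any label works
                "command": server_command,  # path noted in Step 1
                "args": [],
                # ${MEM0_API_KEY} is meant to be expanded from the environment,
                # so the secret itself never lands in version control.
                "env": {"MEM0_API_KEY": "${MEM0_API_KEY}"},
            }
        }
    }


def write_mcp_config(project_root: Path, server_command: str) -> Path:
    """Write .mcp.json into project_root and return its path."""
    target = project_root / ".mcp.json"
    config = build_mcp_config(server_command)
    target.write_text(json.dumps(config, indent=2) + "\n")
    return target


if __name__ == "__main__":
    # Hypothetical path, for illustration only.
    print(json.dumps(build_mcp_config("/path/from/step-1"), indent=2))
```

Keeping the key as a variable reference rather than a literal is what makes the file safe to commit for team-shared configuration.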

Step 2b: Configure for Claude Code Desktop

If you want the Mem0 MCP server to work with Claude Code Desktop, you’ll need to edit the ~/.claude.json file. Edit the JSON file with vim or another editor of your choice (is there another choice?) and add the mem0 server entry:

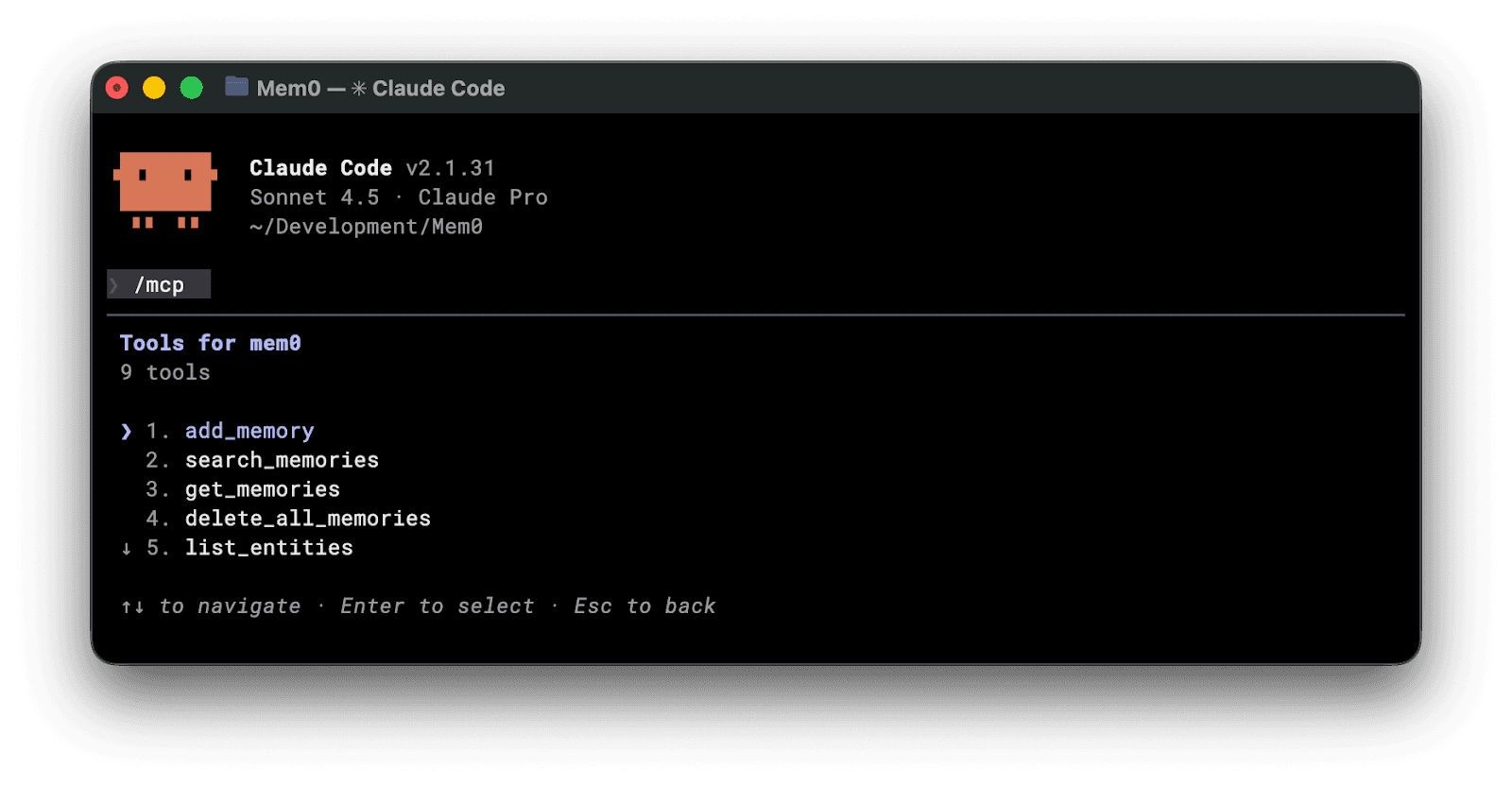
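Since ~/.claude.json usually holds other settings too, it's safer to merge the new entry in than to overwrite the file. Here is a small sketch of that merge on plain dictionaries; the function name and the sample keys are hypothetical.

```python
import copy


def add_server(config: dict, name: str, entry: dict) -> dict:
    """Return a copy of config with entry registered under mcpServers[name]."""
    merged = copy.deepcopy(config)  # leave the caller's dict untouched
    servers = merged.setdefault("mcpServers", {})
    servers[name] = entry
    return merged


# Hypothetical existing settings, for illustration only.
existing = {"theme": "dark", "mcpServers": {"other": {"command": "other-server"}}}
updated = add_server(existing, "mem0", {"command": "/path/from/step-1", "args": []})
print(sorted(updated["mcpServers"]))  # prints "['mem0', 'other']"
```

In practice you would load the file with json.load, pass the result through a function like this, and write it back with json.dump.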

Once done, restart Claude Code. On the CLI app, run the /mcp command to see if the MCP server is connected.

For the desktop app, hit the plus icon and you should see the MCP listed.

Step 3: Enable graph memory (optional)

Depending on how you use Claude, you may need relationship-aware memory that tracks connections between entities. If you need that, add the following line in the MCP server configuration:

Mem0’s graph memory improves accuracy for multi-hop reasoning but requires the Pro plan ($249/month).

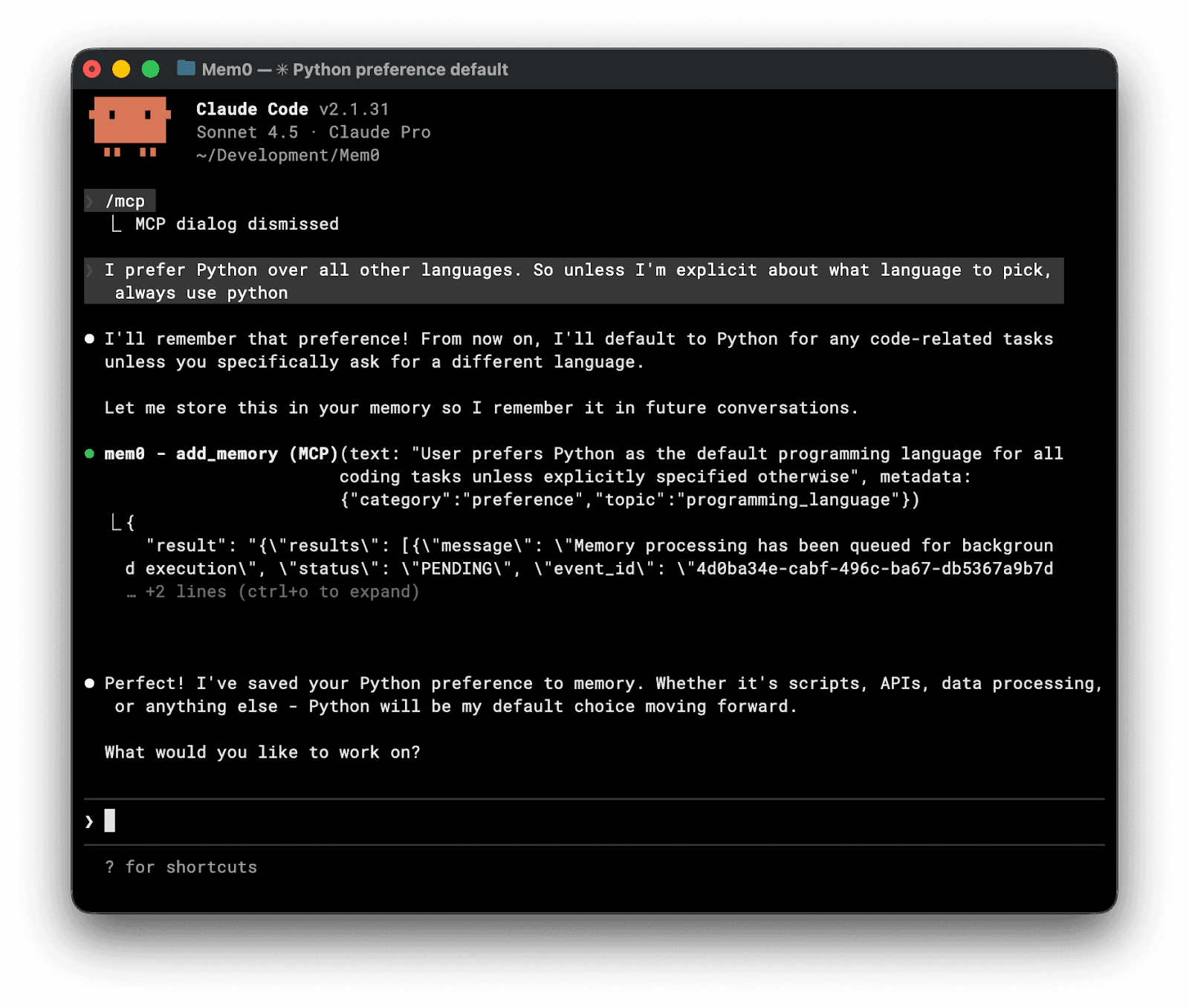

Step 4: Test Mem0 with Claude

Start chatting with Claude Code while the Mem0 MCP server is connected. It will automatically create new memories and insert them into the context whenever they’re needed.

That’s it. Next time you ask Claude to write code, it will fetch your memory and use that information to write code exactly as you prefer.

The best part is that memory turns Claude Code into an ever-evolving agent. After a while, it starts to feel so personal that it’s hard to use anything else.

Configuring CLAUDE.md For Memory Context

Claude Code automatically reads CLAUDE.md files at session start. You can use this setup to create a structured memory file at ~/.claude/CLAUDE.md for global preferences:

Create project-specific files at ./CLAUDE.md in each repository:

Mem0 MCP Tools Available After Setup

Once you configure the Mem0 MCP server, here are the tools that are available to Claude:

| Tool | Function |
|---|---|
| | Store new memories with user/agent scope |
| | Semantic search across stored memories |
| | List all memories with optional filters |
| | Modify existing memory by ID |
| | Remove specific memory |
| | Bulk delete by scope |

Use natural language to invoke: "Remember that this project uses PostgreSQL with Prisma" or "What do you know about our authentication setup?"

How Does Persistent Memory Improve Claude Code Workflows?

If you’ve just implemented memory, you’re not going to see the benefits immediately. But let me show you a hypothetical demonstration of what your workflows would look like with and without memory implementation.

Without Memory: Debugging Authentication

Session 1: You explain the auth system uses NextAuth with Google and email providers, that tokens expire after 24 hours, and that the refresh logic lives in /lib/auth/refresh.ts. You debug an issue where tokens aren't refreshing properly.

Session 2: You re-explain the entire auth setup. Claude suggests checking token expiration, which you already know is 24 hours. You spend the first 10 minutes re-establishing context before making progress.

Session 3: The refresh bug resurfaces in a different form. You've forgotten the specific edge case you discovered in Session 1. You debug from scratch.

With Memory: Debugging Authentication

Session 1: Same debugging process, but Claude automatically stores: "Auth uses NextAuth with Google/email. Tokens expire 24h. Refresh logic in /lib/auth/refresh.ts. Found edge case: refresh fails when token expires during active request."

Session 2: When you say something like “Let’s continue on the auth logic fix,” Claude asks directly: "Is this related to the token refresh edge case we found, where refresh fails during active requests?"

Session 3: Claude immediately recalls the edge case pattern and checks if the new issue follows the same pattern.

Code Preference Retention

Without memory: Every session, Claude generates code in its default style unless you’ve specified otherwise in a static CLAUDE.md file. You repeatedly correct it ("Use arrow functions" or "I prefer explicit return types") or edit the markdown file by hand.

With memory: You state preferences once. Claude stores: "Prefers arrow functions, explicit TypeScript return types, 2-space indent." Future sessions generate code matching your style from the first prompt.

How to Add Cross-Session Project Context to Claude Code with Mem0

Over time, you can instruct Claude to update CLAUDE.md with repeating patterns from conversations and from memory:

In the Hacker News example mentioned earlier, this kind of context injection eliminated the "exploration phase" where Claude reads multiple files to understand project structure. Tasks that had required 3+ exploration agents completed with zero exploration agents.

Why Mem0 as the AI Memory Layer For Claude Code

Mem0 is a universal AI memory layer that extracts, stores, and retrieves contextual information across sessions. It’s tried and trusted by developers, with our GitHub repository at over 46,000 stars.

Mem0 uses a hybrid technical architecture: vector stores for semantic search, key-value stores for fast retrieval, and optional graph stores for relationship modeling.

On the LOCOMO benchmark, Mem0 shows +26% accuracy over OpenAI's memory implementation.

Mem0 offers both cloud-hosted and self-hosted AI memory deployment.

Self-hosted installations use Qdrant for vector storage by default, with support for 24+ vector databases including PostgreSQL (pgvector), MongoDB, Pinecone, and Milvus. LLM providers supported include OpenAI, Anthropic, Ollama, Groq, and 16+ others.

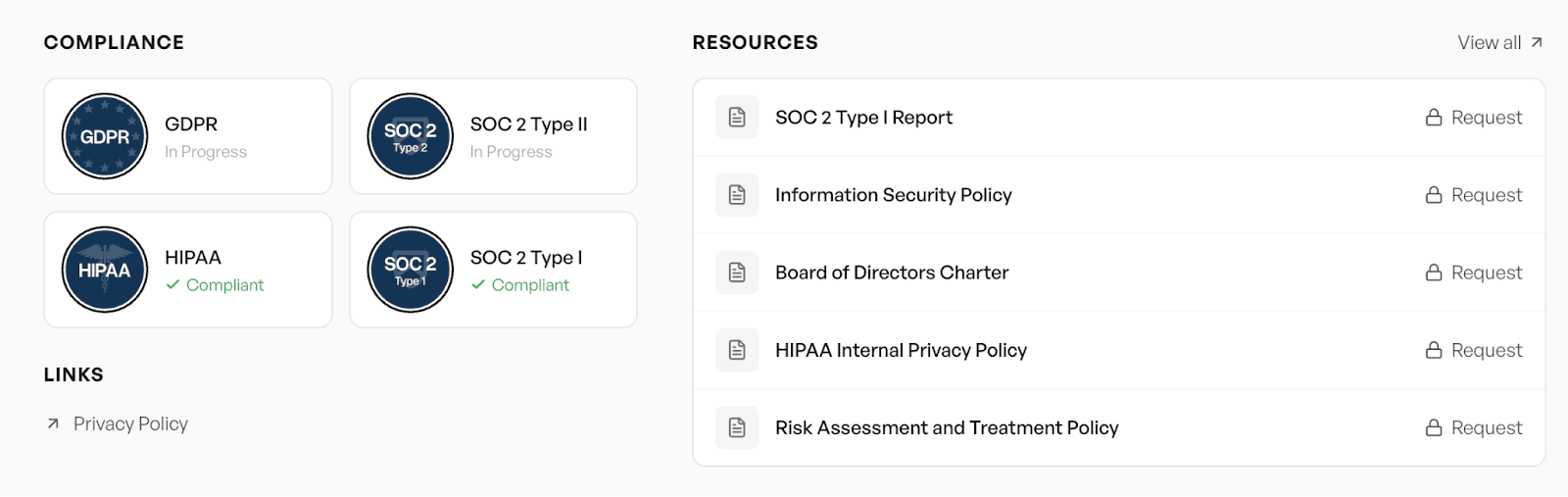

For compliance requirements, Mem0 is SOC 2 Type II certified, GDPR compliant, and offers HIPAA compliance on Enterprise plans. Bring Your Own Key (BYOK) support addresses data sovereignty concerns.

The Mem0 Python SDK provides async operations for high-throughput applications:
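To show why async matters for throughput, here is an offline sketch of the pattern. It deliberately does not import the Mem0 SDK: StandInAsyncMemory is a made-up class with add and search coroutines so the example runs without an API key, and the real client's method signatures may differ, so check the SDK docs before adapting it.

```python
import asyncio


class StandInAsyncMemory:
    """Offline stand-in for an async memory client (not the Mem0 SDK)."""

    def __init__(self) -> None:
        self._items: list[tuple[str, str]] = []

    async def add(self, text: str, user_id: str) -> None:
        await asyncio.sleep(0)  # simulates awaiting a network call
        self._items.append((user_id, text))

    async def search(self, query: str, user_id: str) -> list[str]:
        await asyncio.sleep(0)
        words = query.lower().split()
        return [text for uid, text in self._items
                if uid == user_id and any(w in text.lower() for w in words)]


async def store_many(client: StandInAsyncMemory, user_id: str, facts: list[str]) -> None:
    # gather() issues the writes concurrently instead of one after another.
    await asyncio.gather(*(client.add(fact, user_id=user_id) for fact in facts))


async def main() -> list[str]:
    client = StandInAsyncMemory()
    await store_many(client, "alice",
                     ["Uses PostgreSQL with Prisma", "Prefers arrow functions"])
    return await client.search("prisma", user_id="alice")


if __name__ == "__main__":
    print(asyncio.run(main()))  # prints "['Uses PostgreSQL with Prisma']"
```

With a real network-backed client, the gather call is where the win comes from: many writes wait on the network at the same time rather than in sequence.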

Memory scoping supports multiple organizational levels: user_id for personal memories, agent_id for bot-specific context, run_id for session isolation, and app_id for application-level defaults.
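Here is a small sketch of how scope filtering behaves conceptually. The four field names come from the paragraph above; the ScopedMemory class and filter_by_scope function are hypothetical and are not part of the Mem0 SDK.

```python
from dataclasses import dataclass
from typing import Optional

SCOPE_KEYS = {"user_id", "agent_id", "run_id", "app_id"}


@dataclass(frozen=True)
class ScopedMemory:
    text: str
    user_id: Optional[str] = None
    agent_id: Optional[str] = None
    run_id: Optional[str] = None
    app_id: Optional[str] = None


def filter_by_scope(memories: list[ScopedMemory], **scope: str) -> list[ScopedMemory]:
    """Keep memories whose fields match every scope key given."""
    unknown = set(scope) - SCOPE_KEYS
    if unknown:
        raise ValueError(f"unknown scope keys: {sorted(unknown)}")
    return [m for m in memories
            if all(getattr(m, key) == value for key, value in scope.items())]


memories = [
    ScopedMemory("Prefers arrow functions", user_id="alice"),
    ScopedMemory("Debugging token refresh", user_id="alice", run_id="session-3"),
    ScopedMemory("Answers in a formal tone", agent_id="support-bot"),
]
session_only = filter_by_scope(memories, user_id="alice", run_id="session-3")
print([m.text for m in session_only])  # prints "['Debugging token refresh']"
```

Adding more scope keys narrows the result, which is the idea behind using run_id to isolate one session from a user's long-term memories.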

Try Mem0, The Persistent AI Memory Layer for Agents

Adding persistent memory to Claude Code turns it from a stateless tool into a context-aware development partner. The implementation takes less than 5 minutes using the Mem0 MCP server approach, with free tier limits sufficient for individual developers.

And you get up to 10x faster task completion for context-dependent work, a 90% reduction in token usage, and no more repetitive context-building at the start of every session.

If you’re building AI-native development workflows, memory is the foundation that makes everything else work.

Frequently Asked Questions

1. Why does Claude Code need persistent memory?

Claude Code starts every session with zero context, forcing you to re-explain project architecture, coding preferences, and past debugging steps. Adding persistent memory eliminates this repetition, reducing token usage by 90% and speeding up task completion by allowing Claude to recall details from previous sessions immediately.

2. How do I add memory to Claude Code?

You can add memory by installing the Mem0 MCP server. The process involves installing the mem0-mcp-server package via pip, getting an API key from Mem0, and configuring your .mcp.json (for CLI) or ~/.claude.json (for Desktop) file with the server details and your API key.

3. Does this work with both Claude Code CLI and Claude Desktop?

Yes, the Mem0 MCP integration works for both. The configuration steps are nearly identical; you just need to update the specific JSON configuration file used by each interface (.mcp.json for CLI project scope or ~/.claude.json for the Desktop app).

4. Is Mem0 free to use?

Mem0 offers a free tier that includes 10,000 memories and 1,000 retrieval calls per month, which is sufficient for most individual developers. For advanced features like graph memory (relationship tracking), a Pro plan is available.

5. Can I control what Claude remembers?

Yes. You can manage memory using natural language commands or MCP tools. For example, you can tell Claude to "Remember that we use PostgreSQL" or use tools like delete_memory to remove outdated information. You can also configure scoping (user_id, agent_id) to isolate context.
